Raytracing

"When you live in a black-and-white world, the most important thing is contrast." -Neal Stephenson

When I was a kid, I got Bryce 3D for my birthday. I was fascinated by computer art and I thought this program was the shit. It had mathematical ties back to fractal geometry and Benoit Mandelbrot - which made it cooler to me - and it would produce the most magical sci-fi landscapes.

The designers, including Kai Krause, made Bryce a really beautiful program. The program itself was beautiful, which was quite unusual in the mid-90s, and they made it easy to make beautiful things too. Unlike Maya or Blender, Bryce had no real purpose; it was just a beloved toy. You couldn’t do anything with it except play or show off.

Which I did. I sank hours into building sci-fi-looking scenes, sunsets, managing color palettes, manipulating planes and shapes, and getting things just right. And finally, when I knew my art rivaled the Sistine Chapel, I would hit the magic button: Render… and wait for minutes and sometimes hours for the final product.

Bryce was a raytracing program. When you hit Render, it would take the light sources in your 3D world and cast out rays into the virtual world and use a whole heap of geometry to evaluate how those rays reflect, refract, or scatter against surfaces. Then it would update the values of the pixels based on those rays and add another layer. I would sometimes get halfway through a render, take a peek at how the image was turning out, cancel the render, and go make some adjustments. Maybe the texture on the mountains wasn’t what I was expecting and looked ugly with the blue sky. Or the sunrise needed to be more orange than yellow. Then I’d hit Render again and wait.
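To make the "whole heap of geometry" a bit more concrete, here is a minimal sketch of the idea in Python. It is not how Bryce worked internally (I have no idea what its renderer looked like). It assumes the common textbook variant that shoots one ray per pixel out of the camera, checks whether it hits a single sphere, and then shades the hit point by how directly it faces the light. The scene, the function names, and the tiny ASCII output are all made up for illustration.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    # Solve |oc + t * direction|^2 = radius^2 for t (a quadratic in t).
    b = 2.0 * dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None

def render(width=24, height=12):
    """Return a grid of brightness values in [0, 1] for a hypothetical scene."""
    camera = (0.0, 0.0, 0.0)
    center, radius = (0.0, 0.0, -3.0), 1.0   # one sphere in front of the camera
    to_light = normalize((1.0, 1.0, 1.0))    # direction from surface to light
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the pixel to a point on an image plane at z = -1.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            direction = normalize((x, y, -1.0))
            t = hit_sphere(camera, direction, center, radius)
            if t is None:
                row.append(0.0)  # background
            else:
                point = tuple(o + t * d for o, d in zip(camera, direction))
                normal = normalize(tuple(p - c for p, c in zip(point, center)))
                # Simple diffuse shading: brighter where the surface faces the light.
                row.append(max(0.0, dot(normal, to_light)))
        image.append(row)
    return image

if __name__ == "__main__":
    shades = " .:-=+*#"
    for row in render():
        print("".join(shades[min(len(shades) - 1, int(v * len(shades)))] for v in row))
```

The point is that every number in there means something. Want a more orange sunrise or a bigger sphere? Change the light or the `radius` and render again.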

Udio came out in the spring and blew everyone’s minds, becoming the first viable generative music service. Someone described this as the first AI to pass the Turing test in music - you wouldn’t know it was AI generated. Give Dune the Musical a listen!

Naturally, I made some songs about my kids. And they were fun! The lyrics stayed on point and were sometimes inventive. The beats were good. Instruments and synth filled in the sound with multiple layers. My kids love them.

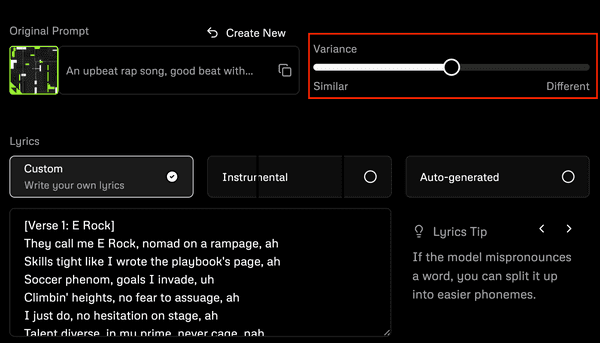

I wanted to change the beat or manipulate the lyrics a bit for a couple of the songs. Udio has a Remix button and here are your options:

How similar do I want it to be? Well, I want it to be exactly the same, just with a bit less snare and a modified bass line.

In more traditional music software, each of the sounds would be built up over different tracks and I’d be able to manipulate them individually to build up the sound. With AI, I can’t.
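Here is a rough sketch of what I mean by tracks, in plain Python. It is a toy and not how any real DAW is implemented: each "instrument" is just a sine tone standing in for a recorded part, and the track names, `SAMPLE_RATE`, `tone`, and `mix` are all hypothetical. What it shows is that when the parts are kept separate, "a bit less snare" is a one-number change that leaves everything else untouched.

```python
import math

SAMPLE_RATE = 8000  # samples per second; an arbitrary choice for this toy

def tone(freq, seconds, volume=1.0):
    """Generate a sine tone as a list of samples (a stand-in for a real track)."""
    n = int(SAMPLE_RATE * seconds)
    return [volume * math.sin(2 * math.pi * freq * k / SAMPLE_RATE) for k in range(n)]

def mix(tracks, gains):
    """Sum the tracks sample by sample, scaling each track by its own gain."""
    length = min(len(samples) for samples in tracks.values())
    return [
        sum(gains.get(name, 1.0) * samples[k] for name, samples in tracks.items())
        for k in range(length)
    ]

# Three separate tracks that together make up the "song".
tracks = {
    "bass": tone(55, 0.5),
    "snare": tone(200, 0.5, volume=0.8),  # not a real snare, just a placeholder
    "lead": tone(440, 0.5, volume=0.5),
}

original = mix(tracks, {})
less_snare = mix(tracks, {"snare": 0.5})  # halve the snare, touch nothing else
```

The difference between `original` and `less_snare` is exactly half of the snare track and nothing more. That is the kind of handle a Remix slider doesn't give me.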

“when we train a large neural network to accurately predict the next word in lots of different tasks, what we are doing is that we are learning a world model... it turns out that to just learn the statistical correlations in text, to compress them really well, what the neural network learns is some representation of the process that produced the text. This text is actually a projection of the world. There is a world out there, and it has a projection on this text.”

Language is a very messy, high-dimensional problem. The matrices being fed into our massive banks of GPUs are truly huge: the number of dimensions is in the thousands or tens of thousands. AI image and audio generation tools work in much the same way, often using the same transformer architectures. But in imagery and music we have really well-defined models for what these things look like: 3D structures and chord structures and times and pixels and ray traces and scatter textures. I’m not saying these are the best models or even the only models, but they are very good models of these worlds.

AI obviously has some kind of model of the world! The stuff it’s doing would have been considered sci-fi just 10 years ago. But nobody understands the underlying model. Nobody can manipulate or change it. The closest we get is “oh make it similar”. Midjourney has --cref and --cw to help with character consistency. This works to a degree but it is an ignorant command.

Getting specific details right matters a lot. Details reflect a strong understanding of both the world model and the nature of the prompt. For example, I asked Grok to make me an image with the following prompt:

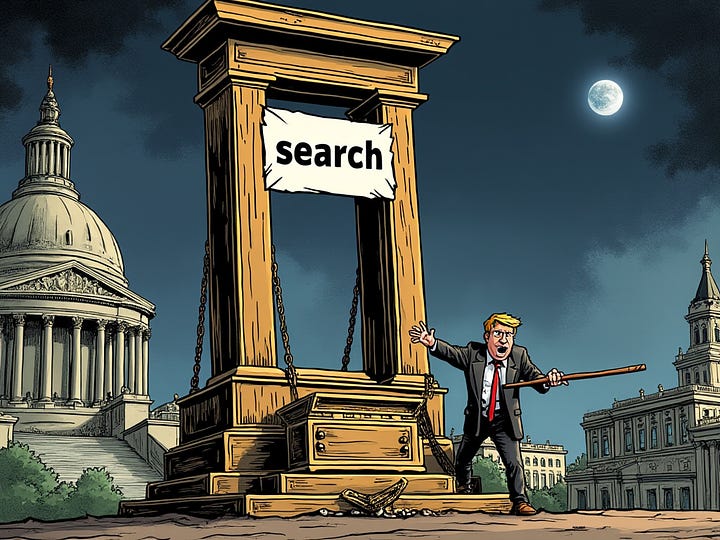

“I want a political cartoon style of a guillotine with ‘search’ on the chopping block and the guillotine being named ‘LLMs’”

Writing and letters are notoriously tricky in image generation, so I expected a struggle. Compare:

I favor the first image simply because it spelled “Search” correctly. But “Search” looks like the name of the guillotine, not the thing getting its head chopped off.

AI commands are largely ignorant. We have no idea what we’re going to get. We’ve learned plenty of ways to coax what we want out of these models, but it is largely trial and error.

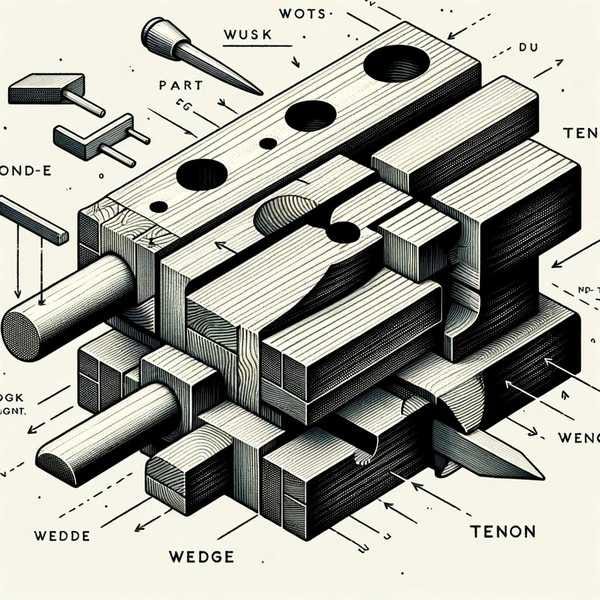

A while back, I was trying to remember what joinery these old woodworking benches used to make them portable. So I asked ChatGPT: “What are the joints called for the legs in a traditional Moravian workbench?” and got a fantastic answer:

In traditional Moravian workbenches, the legs are often attached to the top using a specific type of joint known as the “wedge tenon joint” or “tusk tenon joint.” This joint is a variation of the mortise and tenon joint, which is a fundamental woodworking joint. In the wedge (or tusk) tenon joint, a wedge-shaped piece is used to secure the tenon into the mortise. This design allows for a very strong connection that can be easily assembled and disassembled, making the Moravian workbench popular for its portability and sturdiness.

That’s exactly what I wanted. Next step: “Can you show a diagram?”

This is the woodworking version of a spaghetti sprawl of human fingers. There is zero precision and it is therefore useless. If these models really are building a world model - and I still accept that they may be - there’s always going to be an upper bound to their utility, set by our understanding of the model itself. It’s not enough for the AI to build an image from a one-shot or few-shot prompt. Humans need to be able to understand the model so that - like the shapes and structures in a raytracing program - we can manipulate details of the image. For now, we are almost entirely ignorant of the worlds these models are building.